Shadow libraries and AI training

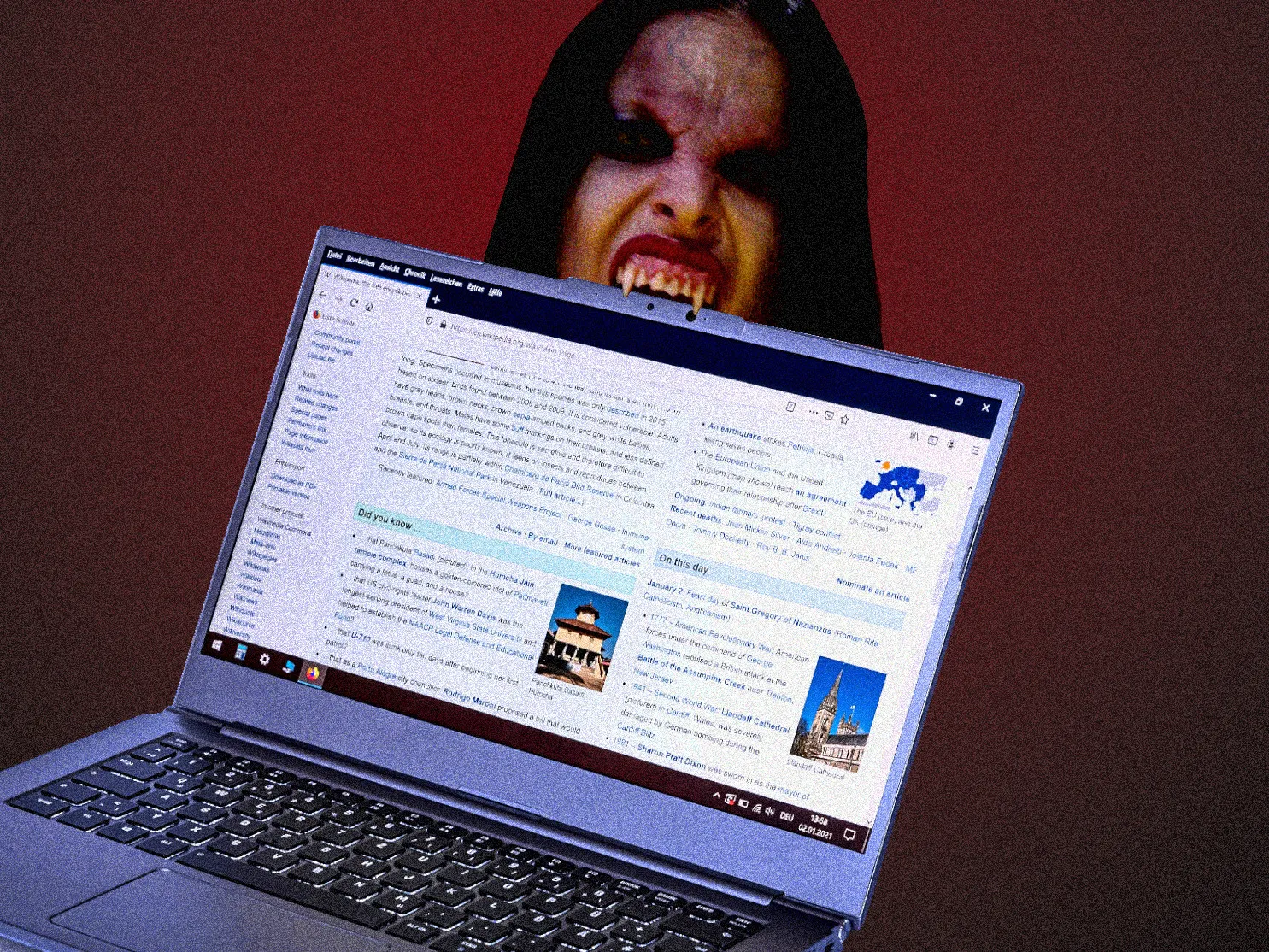

For those just learning about LibGen because of the reporting on Meta and other companies training LLMs on pirated books, I’d highly recommend the book *Shadow Libraries: Access to Knowledge in Global Higher Education* (open access).

I just read it while working on the Wikipedia article about shadow libraries, and it’s a fascinating history.

I fear the already fraught conversations about shadow libraries will take a turn for the worse now that they’re overlapping with the incredibly fraught conversations about AI training.